This blog post is a proof of concept (POC) for a homelab and does NOT implement best practices for an enterprise environment. The goals of this post:

- Generate an intermediate certificate for Logstash.
- Generate leaf certificates for logging clients.
- Set up Logstash to use the intermediate certificate to authenticate clients.
- Set up Filebeat to use a client/leaf certificate to authenticate itself to Logstash.
- Use mTLS for communication between Logstash and Filebeat.

Lastly, I will cover the Python script I created to automate constructing this logging certificate chain of trust.
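To make the Logstash goal above a little more concrete, here is a rough sketch of a beats input that only accepts clients presenting a certificate signed by a trusted CA. This is not the configuration from this post: the port and all file paths are hypothetical. The option names (`ssl`, `ssl_certificate_authorities`, `ssl_verify_mode`) are the ones used by older releases of the beats input plugin; newer releases rename some of them, so check the documentation for your version.

```
input {
  beats {
    # Port on which Filebeat clients connect (assumed value).
    port => 5044

    # Present a server certificate to Filebeat (hypothetical paths).
    ssl => true
    ssl_certificate => "/etc/logstash/certs/logstash.crt"
    ssl_key => "/etc/logstash/certs/logstash.key"

    # Trust only client certificates issued under this chain (hypothetical path).
    ssl_certificate_authorities => ["/etc/logstash/certs/ca-chain.crt"]

    # Reject any client that does not present a valid certificate.
    ssl_verify_mode => "force_peer"
  }
}
```

With `force_peer`, a Filebeat client without a valid leaf certificate should fail the TLS handshake, which is the server half of mTLS. The client half is configured in Filebeat.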

How to ingest Filebeat output with Logstash

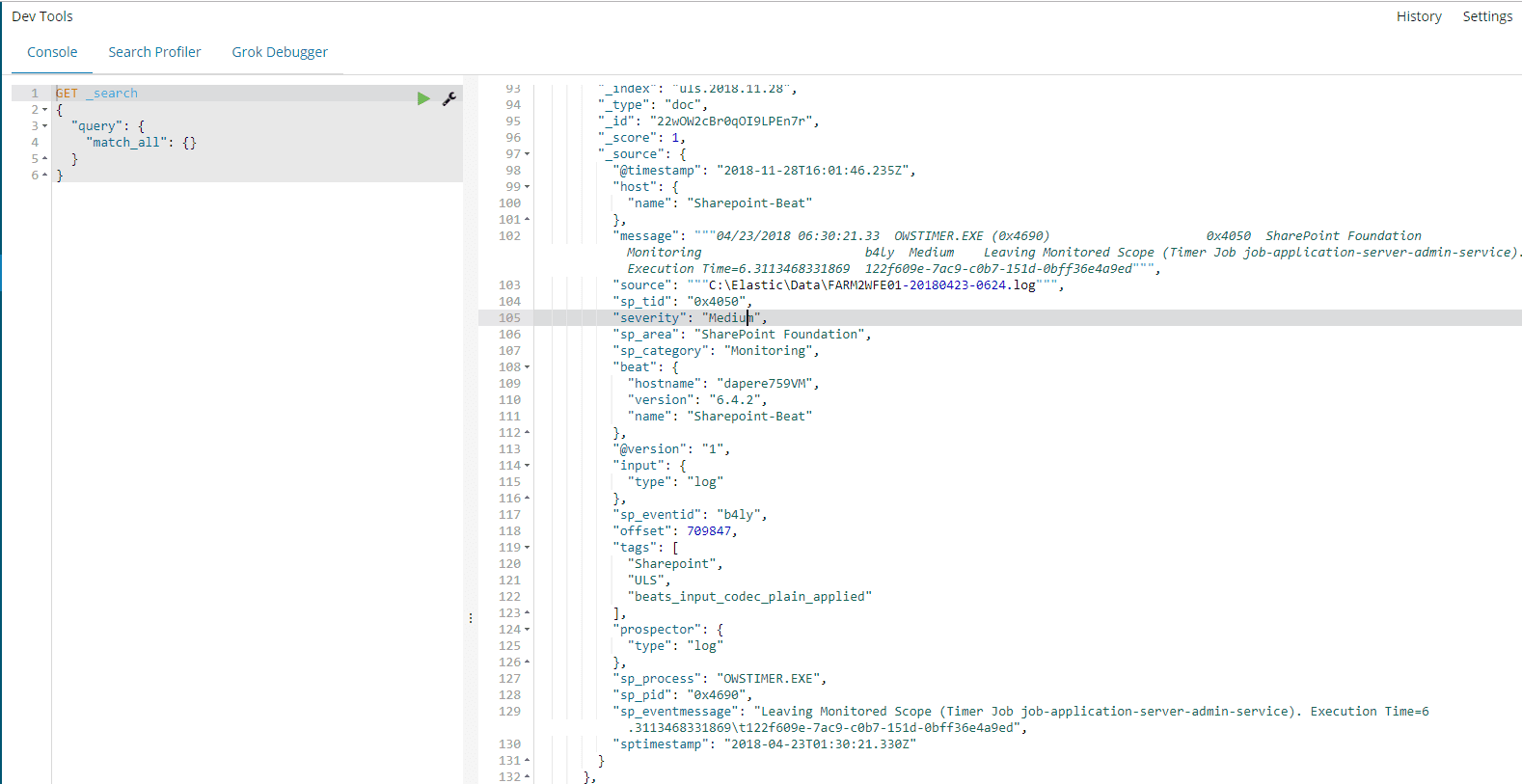

The step-by-step instructions in this post, will demonstrate how to create the certificate chain of trust using Vault. The purpose of this blog post is to provide instructions on how to setup Logstash and Filebeat with mutual TLS (mTLS). Finally, the document_type option uses the value from the event metadata (that is set by our Filebeat configuration) to set the document type.Do you know if your Filebeat client is connecting to a rogue Logstash server? Do you know if your Logstash server is accepting random logs from random devices? If you have answered “I don’t know” to either of these questions then this blog post is for you. This means a new index will be written for every day. The value given below specifies that indexes will have dynamic names, using the name of the beat plugin taken from the metadata of the event and a daily timestamp. The index option allows us to define the name of the index to which records from this output should be written. The option after the hosts list sets the manage_template option to false since we will be using a custom template and we don’t want Logstash to overwrite our customizations. Here we configure the address of the Elasticsearch node and a few other settings. Once Logstash is done parsing the event, it will send it’s output to Elasticsearch using the elasticsearch output plugin. The output section is pretty straight-forward. Each filter section begins with this if statement that drops any message beginning with a ‘#’ character.Ĭonvert => This plugin uses a comma as a delimiter by default, but it also allows you to set a custom delimiter, which is exactly what I needed (my logs use the default space delimited format).īefore getting to any of this though, we have to make sure to ignore the comments included at the beginning of Bro log files. The use of predictable delimiters means we can make use of the csv filter plugin. By default the fields are space delimited, though custom delimiters can also be set.

Either way, each log includes a definition of each field at the top of the file. This is where fields are created and populated.īro logs follow a predicatable format, though fields may be ordered differently between version or based on customizations. The filter section is where available filter plugins are used to parse through each message Logstash receives. The filter section is where the real work happens. Here the two options set are the host IP and port on which to listen for Filebeat data.

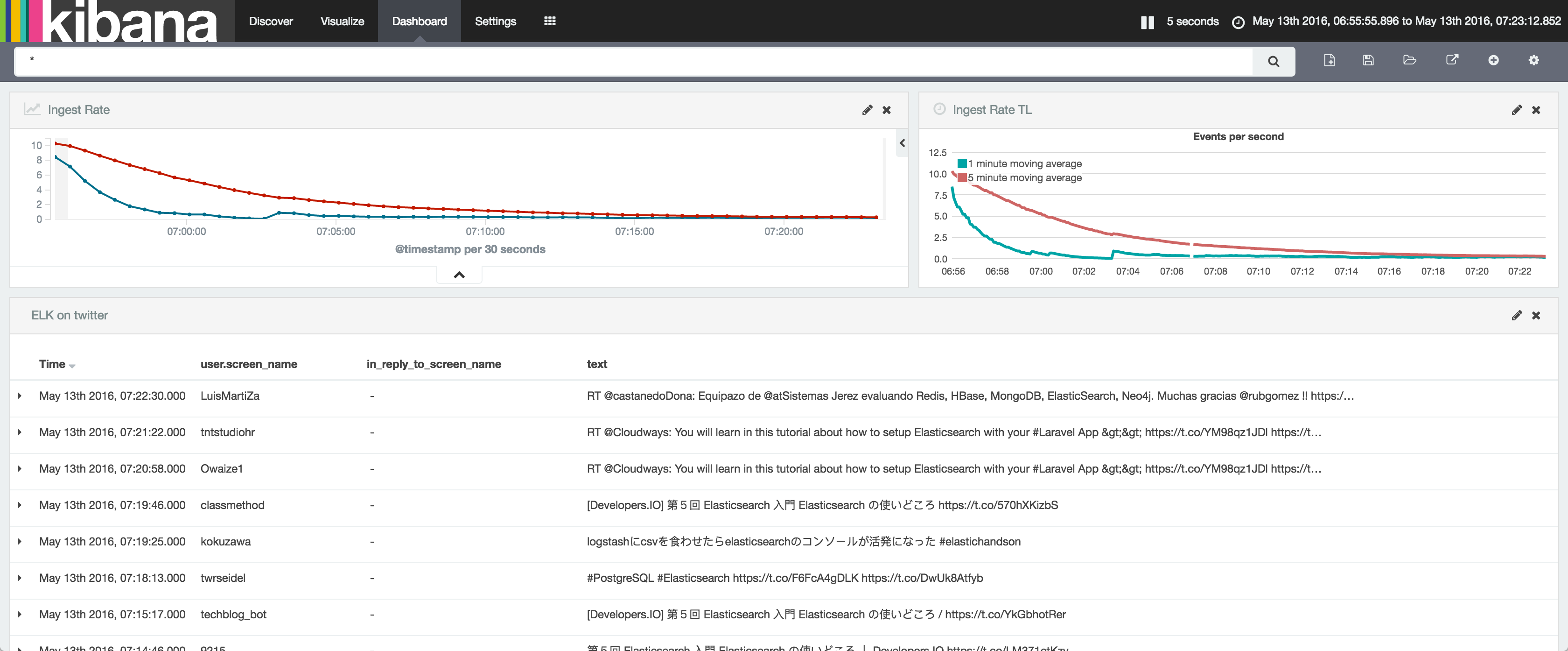

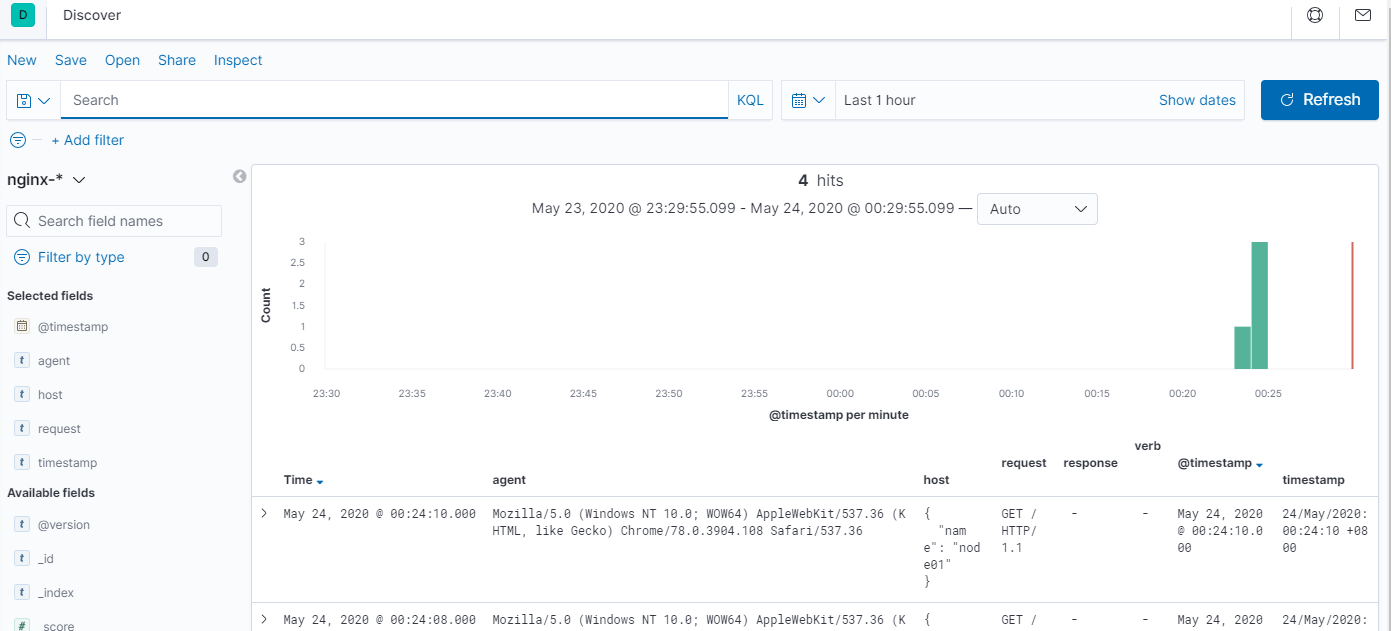

Since the Bro logs would be forwarded to Logstash by Filebeat, the input section of the pipeline uses the beats input plugin. Creating Logstash Inputs, Filters, and Outputs Input Section It is typically used to tail syslog and other types of log files, so I figured it would be a good choice for working with Bro logs. The few guides I found (including one on the Elastic website) assumed Logstash would be running on the same host as Bro, but in my case, I wanted one central ELK server and to have Bro logs forwarded to Logstash and then Elasticsearch.įilebeat is one of the plugins available for monitoring files. Breaking the logs down and parsing them into Elasticsearch increases the usefulness of the data by providing a larger scope of visibility and a way to quickly get a sense of trends and what the network normally looks like. Elasticsearch’s powerful querying capabilites and Kibana’s visualizations are very useful for making sense of the large quantities of data that Bro can produce in a few hours. I recently completed a project in which I wanted to collect and parse Bro logs with ELK stack.
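For readers who want to see the input, filter, and output sections described in this post side by side, here is a minimal sketch of such a pipeline. It is not the original configuration. The listen address, port, Elasticsearch host, column names, and converted fields are illustrative assumptions, and the column list is deliberately truncated. The `document_type` option also belongs to older versions of the elasticsearch output plugin and may not be available in newer ones.

```
input {
  beats {
    # Address and port on which to listen for Filebeat data (assumed values).
    host => "0.0.0.0"
    port => 5044
  }
}

filter {
  # Drop the comment lines found at the top of every Bro log file.
  if [message] =~ /^#/ {
    drop { }
  }

  csv {
    # Illustrative, truncated column list; take the real names and order
    # from the field definitions at the top of your own log files.
    columns => ["ts", "uid", "orig_h", "orig_p", "resp_h", "resp_p", "proto"]

    # The post describes the fields as space delimited; put a literal tab
    # between the quotes instead if your logs are tab separated.
    separator => " "

    # Store the port columns as integers rather than strings.
    convert => {
      "orig_p" => "integer"
      "resp_p" => "integer"
    }
  }
}

output {
  elasticsearch {
    # Address of the Elasticsearch node (assumed value).
    hosts => ["localhost:9200"]

    # Keep Logstash from overwriting a custom index template.
    manage_template => false

    # Beat name from the event metadata plus a daily timestamp,
    # so a new index is written every day.
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"

    # Document type taken from the metadata set by Filebeat.
    document_type => "%{[@metadata][type]}"
  }
}
```

If a log has more fields than the `columns` list names, the csv filter gives the extra values generated column names by default, so a short list like this one is enough for a first test before filling in the full set.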
